Unleashing the Power of LLMs, OpenAI API, and LangChain for Personalized City-Specific Recipe Recommendations

Langchain | OpenAI | Prompt |Prompt Template | Python | maxtekAI

Cooking is an art, and having a knowledgeable cooking assistant can greatly enhance your culinary journey. In this blog post, we will explore how the combination of LLMs (Large Language Models), the OpenAI API, and LangChain can be leveraged to build an intelligent Cook Bot. This bot will provide recipes based on the location provided by the user, cooking tips, and even real-time translation for global culinary adventures.

Table of contents

Introduction

Setting up the Environment Variables for OpenAI API

Installing and loading dependencies

Creating a new Python script

Invoking the method to load the environment variable and creating a new instance of the language model

Creating Location and Meal Chains

Building the Overall Chain

Streamlit User Interface

Conclusion

- Introduction

Cooking is an art that brings people together through delicious flavors and culinary experiences. Before delving into the code for the cook assistant, let's go over a few terms that may be unfamiliar to non-specialists but are key to the implementation: OpenAI, LangChain, LLMs, prompts, and prompt templates.

OpenAI: OpenAI is a leading artificial intelligence research organization that has developed state-of-the-art language models capable of understanding and generating human-like text. In our cook assistant, an OpenAI language model, accessed through the OpenAI API, generates the responses to user inputs. The model has been trained on vast amounts of text data, which lets it pick up the nuances of human language and produce contextually relevant, coherent responses. The assistant passes user queries about cooking, recipe recommendations, and cooking tips to the model, which replies in an informative, conversational way.

LangChain: A Python library that provides an easy-to-use interface for building conversational agents using large language models (LLMs). In the context of the cook assistant, LangChain is used to build a conversational interface that allows users to ask for cooking advice and recipe recommendations.

At the core of LangChain is the concept of a "chain," which is essentially a sequence of calls to an LLM (or to other components), where each step contributes to the response to the user's input. In the cook assistant, LangChain is used to create two separate chains: one that suggests a classic dish for the user's location and another that produces a recipe for a given meal.

LLMs (Large Language Models): In the realm of the cooking assistant, LLMs are like culinary encyclopedias on steroids. These linguistic powerhouses possess a vast understanding of recipes, ingredients, cooking techniques, and culinary knowledge. LLMs are trained on massive amounts of text data, allowing them to absorb a wealth of culinary information. When it comes to cooking assistance, LLMs serve as the brilliant minds behind the scenes. They can comprehend and generate text-based responses to user queries, providing valuable cooking advice, recipe suggestions, and even personalized recommendations.

Prompt: In the context of LLMs and the Cook Assistant, a prompt refers to the initial instruction or input provided to the language model to generate a response. The prompt serves as a guiding context for the model, helping it understand the user's request and generate relevant and meaningful output. When interacting with the Cook Assistant, the user provides prompts related to the city. For example, a user might ask, "What is a delicious recipe for a particular city?" In this case, the prompt is the user's question itself. The prompt is then passed to the LLM, such as OpenAI's GPT-3 model, which processes the input and generates a response based on its understanding of the given context. The model analyzes the prompt and utilizes its vast knowledge of cooking techniques, ingredients, and recipes to generate a helpful and informative response to the user's query.

Prompt template: In the context of Large Language Models (LLMs) and the Cook Assistant, a prompt template is a structured framework that incorporates variables and placeholders to create dynamic prompts. Prompt templates allow for flexible and customizable input that can be easily adapted to different user queries and contexts. The Cook Assistant utilizes prompt templates to generate prompts tailored to specific user inputs, such as location or meal preferences. Instead of providing a static prompt, a prompt template includes placeholders that will be replaced with actual values provided by the user. For example, a prompt template for location-based recommendations might look like this: "Tell me the best food in {user_location}." Here, {user_location} is the placeholder that will be replaced with the actual user input, such as "New York" or "Paris." The prompt template allows the Cook Assistant to dynamically generate prompts based on the user's location, ensuring relevant and personalized responses. By employing prompt templates, the Cook Assistant can handle various user inputs and adapt its prompts accordingly. Prompt templates enable a more interactive and conversational experience, allowing users to provide specific details or preferences and receive targeted recommendations or recipes in response. In closing, prompt templates enhance the flexibility and interactivity of the Cook Assistant, enabling users to engage in meaningful conversations and receive customized cooking assistance based on their specific needs and preferences.

- Setting up the Environment Variables for OpenAI API

Before we start building the Cook Bot, we need to set up the environment variables for the OpenAI API. The API key is a sensitive piece of information that should not be hard-coded into your codebase. Instead, it should be stored as an environment variable. Here are the steps to set up the environment variable:

Log in to the OpenAI dashboard and copy your API key.

Open the terminal and create a project directory using mkdir cookAssistant, then go into that directory using cd cookAssistant.

Create a new environment file using nano .env and add the following line to it:

OPENAI_API_KEY="your api key here"
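Once the key lives in an environment variable, a script can check for it up front instead of failing later inside an API call. The following is a minimal sketch using only the standard library; the helper name require_env is hypothetical and not part of any library used in this post.

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or raise a clear error."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set. "
            "Add it to your .env file or export it in your shell."
        )
    return value


if __name__ == "__main__":
    # Assumes OPENAI_API_KEY was exported or loaded from .env beforehand.
    try:
        key = require_env("OPENAI_API_KEY")
        print("API key found, length:", len(key))
    except RuntimeError as err:
        print(err)
```

Failing early with a readable message is a design choice here: a missing key otherwise tends to surface only when the first request to the API is made.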

- Installing and loading dependencies

In this section, we are going to install the required dependencies using pip and import them into our Python script.

pip install openai langchain streamlit streamlit-chat python-dotenv

- Creating a new Python script

Create a new Python script using nano app.py in the cookAssistant project directory, where the .env file is, and paste in the following code step by step.

from langchain.llms import OpenAI
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
from langchain.chains import SimpleSequentialChain
import streamlit as st
from streamlit_chat import message
from langchain.chains import ConversationChain
from dotenv import load_dotenv
import os

- Invoking the method to load the environment variable and creating a new instance of the language model

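# Load the variables defined in .env into the process environment
load_dotenv()
# Read the key; the name must match the one used in the .env file
token = os.environ.get("OPENAI_API_KEY")
# temperature=1 makes the model's output fairly varied
llm = OpenAI(temperature=1, openai_api_key=token)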

In the above code, the load_dotenv() function loads the environment variables from the .env file. The token variable retrieves the OpenAI API key stored in the OPENAI_API_KEY environment variable. Next, an instance of the OpenAI class is created with the provided API key (token) and a temperature of 1. The temperature parameter controls the randomness of the responses generated by the model: lower values make the output more predictable, and higher values make it more varied.
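To build some intuition for temperature: language models typically turn raw scores for candidate words into probabilities with a softmax, and the temperature divides those scores first. The sketch below uses made-up scores for three candidate words. The numbers and the function name softmax_with_temperature are illustrative assumptions, and this is not OpenAI's implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; higher temperature flattens them."""
    if temperature <= 0:
        # This sketch only handles positive temperatures.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the top-scoring word, while a higher temperature spreads it across the alternatives, which is why higher settings tend to produce more varied text.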

- Creating Location and Meal Chains

To provide location-based recommendations and meal-specific recipes, we create separate chains for location and meal inputs. We define prompt templates that incorporate the user's location and desired meal to generate contextually relevant responses. By utilizing LLMChain and PromptTemplate from LangChain, we establish the foundation for our cook assistant's knowledge and response generation as shown below.

def load_chain_loc():
    # Prompt that asks the model for a classic dish from the given location
    template = """Your job is to come up with a classic dish from the area that the user suggests.
    % USER LOCATION
    {user_location}

    YOUR RESPONSE:
    """
    prompt_template = PromptTemplate(input_variables=["user_location"], template=template)
    # Tie the prompt to the language model
    location_chain = LLMChain(llm=llm, prompt=prompt_template)
    return location_chain

In the above code, the load_chain_loc function defines a template for generating prompts based on the user's location input. It uses the PromptTemplate class to create a template with the variable {user_location}. An instance of the LLMChain class is created, passing the OpenAI instance (llm) and the prompt template (prompt_template). The function returns the location_chain instance.
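To see what filling in a placeholder amounts to, plain Python string formatting behaves similarly for a simple template like the one above. This is only an analogy using the standard library, not LangChain's internals, and the helper name render_prompt is hypothetical.

```python
template = """Your job is to come up with a classic dish from the area that the user suggests.

% USER LOCATION
{user_location}

YOUR RESPONSE:
"""


def render_prompt(template: str, **values: str) -> str:
    """Fill the {placeholders} in `template` with the given values."""
    return template.format(**values)


# The rendered text is what would be sent to the model for this input.
prompt = render_prompt(template, user_location="Rome")
print(prompt)
```

Leaving out a value for a placeholder raises a KeyError in this sketch, which is a handy reminder that every variable named in a template needs a matching input.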

def load_chain_meal():
    # Prompt that asks the model for a short recipe for the given meal
    template = """Given a meal, give a short and simple recipe on how to make that dish at home.
    % MEAL
    {user_meal}

    YOUR RESPONSE:
    """
    prompt_template = PromptTemplate(input_variables=["user_meal"], template=template)
    # Tie the prompt to the language model
    meal_chain = LLMChain(llm=llm, prompt=prompt_template)
    return meal_chain

In the above code, the load_chain_meal function defines a template for generating prompts based on the user's meal input. It uses the PromptTemplate class to create a template with the variable {user_meal}. An instance of the LLMChain class is created, passing the OpenAI instance (llm) and the prompt template (prompt_template). The function returns the meal_chain instance.

- Building the Overall Chain

To integrate the location and meal chains, we create an overall chain using SimpleSequentialChain. This allows us to connect the chains and execute them sequentially, ensuring a smooth flow of information and generating cohesive responses. We configure the overall chain to provide verbose output for debugging and testing purposes.

loc_chain = load_chain_loc()
chain_meal = load_chain_meal()
overall_chain = SimpleSequentialChain(chains=[loc_chain, chain_meal], verbose=True)

In the above code, the load_chain_loc function is called to create the loc_chain instance. The load_chain_meal function is called to create the chain_meal instance. Finally, an instance of the SimpleSequentialChain class is created, passing the loc_chain and chain_meal instances as a list.
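The idea behind running chains sequentially is that the output of one step becomes the input of the next: the city produces a dish, and the dish produces a recipe. The sketch below illustrates that flow with ordinary functions. The names run_sequentially, fake_location_step, and fake_meal_step are hypothetical stand-ins, and no model is called.

```python
from typing import Callable, List


def run_sequentially(steps: List[Callable[[str], str]], user_input: str) -> str:
    """Feed the output of each step into the next one and return the final output."""
    result = user_input
    for step in steps:
        result = step(result)
    return result


# Hypothetical stand-ins for the two LLM-backed chains.
def fake_location_step(city: str) -> str:
    return f"a classic dish from {city}"


def fake_meal_step(dish: str) -> str:
    return f"Recipe for {dish}: ..."


final = run_sequentially([fake_location_step, fake_meal_step], "Rome")
print(final)
```

Because each step takes one string and returns one string, the order of the list matters: swapping the two steps would ask for a recipe before a dish has been chosen.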

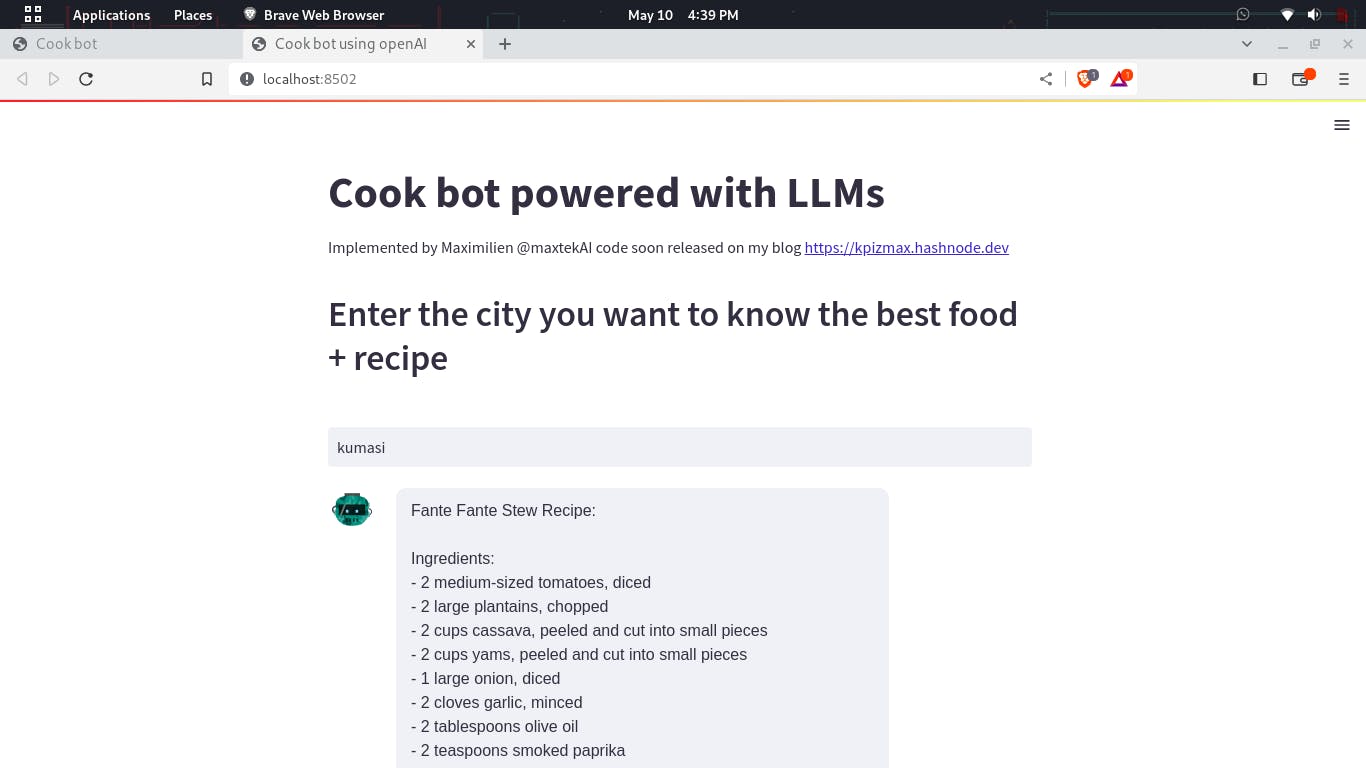

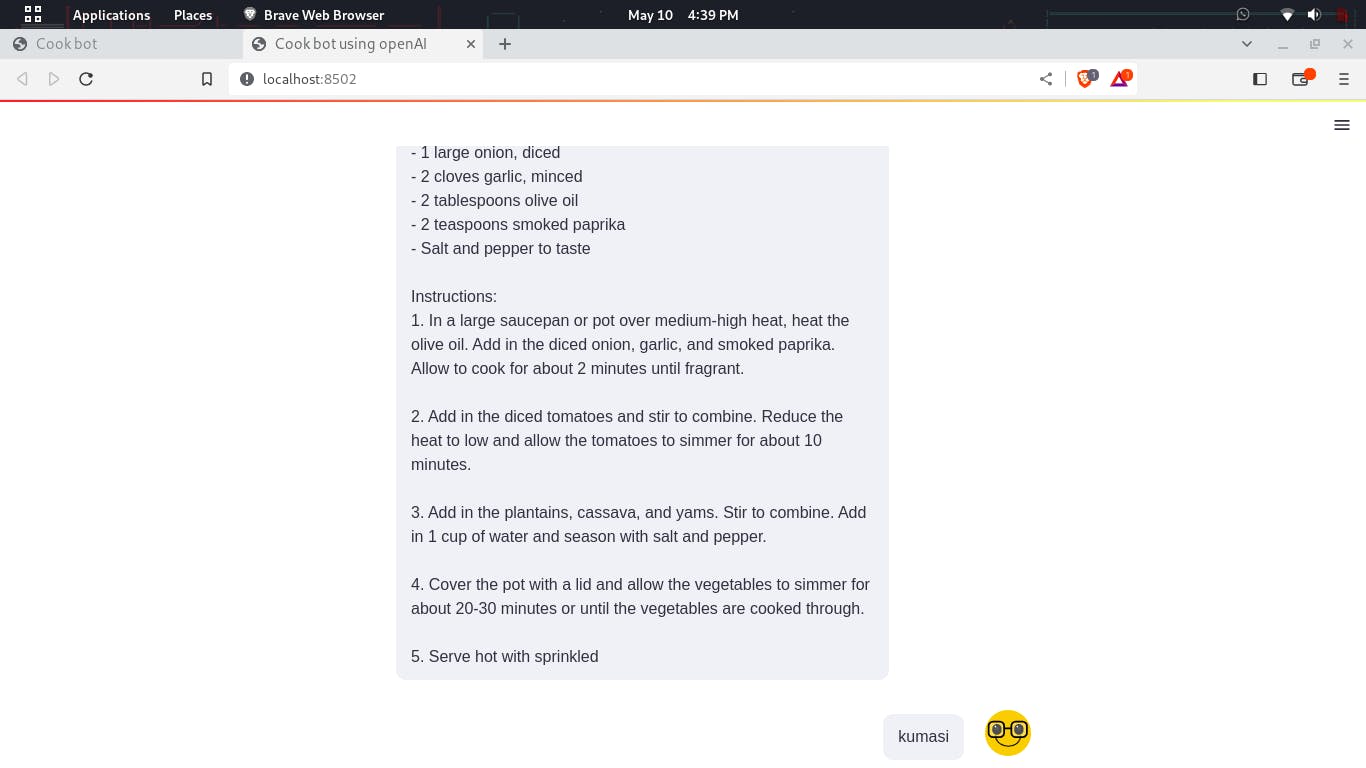

- Streamlit User Interface

In this section, we implement the user interface using Streamlit. We set the page configuration, display the cook assistant's title, and prompt the user to enter the desired city for location-based food recommendations. When the user enters a city, the cook assistant generates a response using the overall chain, and the conversation history is displayed using the Streamlit Chat component, as shown below.

# Configure the page and show the title
st.set_page_config(page_title=" Cook bot", page_icon=":robot:")
st.title("Cook bot powered with LLMs")
st.write("By Maximilien ")

# Keep the conversation history across Streamlit reruns
if "generated" not in st.session_state:
    st.session_state["generated"] = []
if "past" not in st.session_state:
    st.session_state["past"] = []

def get_text():
    st.header("enter the city you want to know the best food")
    input_text = st.text_input("", key="input")
    return input_text

user_input = get_text()

if user_input:
    # Run both chains in order: city -> dish -> recipe
    output = overall_chain.run(input=user_input)
    st.session_state.past.append(user_input)
    st.session_state.generated.append(output)
    st.write(output)

if st.session_state["generated"]:
    # Show the newest exchange first
    for i in range(len(st.session_state["generated"]) - 1, -1, -1):
        message(st.session_state["generated"][i], key=str(i))
        message(st.session_state["past"][i], is_user=True, key=str(i) + "_user")

Let's break down this part of the code step by step:

st.set_page_config(page_title=" Cook bot", page_icon=":robot:"): This line configures the page settings for the Streamlit application. It sets the page title as "Cook bot" and assigns a robot icon to the page.

st.title("Cook bot powered with LLMs"): This line displays a title on the Streamlit app interface, indicating that it is a Cook bot powered by LLMs.

st.write("By Maximilien "): This line displays the attribution "By Maximilien" on the Streamlit app interface.

if "generated" not in st.session_state: st.session_state["generated"] = []: This code block checks if the "generated" key is present in the Streamlit session state. If not, it initializes an empty list assigned to the "generated" key. This list will store the generated responses.

if "past" not in st.session_state: st.session_state["past"] = []: Similar to the previous code block, this block checks if the "past" key is present in the Streamlit session state. If not, it initializes an empty list assigned to the "past" key. This list will store the past user inputs.

def get_text(): ...: This is the function definition for get_text(). It displays a header asking the user to enter the city for which they want to know the best food. It then uses st.text_input() to retrieve the user's input as a text string.

user_input = get_text(): This line calls the get_text() function and assigns the returned user input to the user_input variable.

if user_input: ...: This code block checks if the user_input variable has a non-empty value. If there is user input, it proceeds with the following steps.

output = overall_chain.run(input=user_input): This line executes the run() method of the overall_chain object, passing the user input as the input argument. It generates a response based on the user input using the chained LLMs.

st.session_state.past.append(user_input): This appends the user input to the "past" list stored in the Streamlit session state.

st.session_state.generated.append(output): This appends the generated output to the "generated" list stored in the Streamlit session state.

st.write(output): This line displays the generated output on the Streamlit app interface.

if st.session_state["generated"]: ...: This code block checks if the "generated" list in the Streamlit session state is not empty. If it is not empty, it proceeds with the following steps.

for i in range(len(st.session_state["generated"]) - 1, -1, -1): ...: This loop iterates over the "generated" list in reverse order using the range() function, so the newest exchange is shown first. It retrieves each generated response and its corresponding user input from the Streamlit session state.

message(st.session_state["generated"][i], key=str(i)): This line displays the generated response as a message on the Streamlit app interface, using the message() function. Each message is assigned a unique key based on the loop index.

message(st.session_state["past"][i], is_user=True, key=str(i) + "_user"): This line displays the corresponding user input as a user message (indicating that it was input by the user) on the Streamlit app interface. Each user message is also assigned a unique key, formed from the loop index plus the "_user" suffix.

- Conclusion

With the implementation of the cook assistant using LLMs, the OpenAI API, and LangChain, we have harnessed the power of language models and intelligent conversation chains to create a valuable tool for cooking enthusiasts. The cooking assistant provides personalized recommendations and recipes based on user inputs, empowering users to explore new cuisines and enhance their culinary skills. By combining advanced technologies, we are revolutionizing the cooking experience and paving the way for future innovations in the realm of intelligent kitchen assistants.

The source code can be found on my GitHub repository here

If you want to contribute or you find any errors in this article, please do leave me a comment.

You can reach out to me on any of the Matrix decentralized servers. My Element messenger ID is @maximilien:matrix.org

If you are on one of the Mastodon decentralized servers, here is my ID: @maximilien@qoto.org

If you are on LinkedIn, you can reach me here

If you want to contact me via email for freelance work: maximilien@tutanota.de

If you want to hire me to work on machine learning, data science, IoT, and AI related projects, please reach out to me here

Warm regards,

Maximilien.